Monte Carlo Method

Monte Carlo Forecasting is a method for creating predictive forecasts based on a technique of repeatedly running random simulations of the samples to see a range of possible outcomes, and then to use those outcomes to forecast results of larger (or future situations). The predictive model offers a percentage probability of being in certain ranges.

It can be a very effective tool for statistical analysis of data, and there has been a surge in it’s use in Software delivery Forecasting. It can be a useful tool even with small sample sizes but it relies on three very crucial premises.

- The sample data MUST be reflective of the forecast situation,

- The data must be also be statistically independent (results of one event does not impact another).

- The greater the sample size the more reliable the simulation will be.

Monte Carlo Fallacy

Rather amusingly there is also a psychological condition called the Monte Carlo Fallacy where we assume past results impose a probabilistic bias on future events. “The last 5 spins of a roulette wheel came up Black therefore the next is more likley to be Red as the odds must balance out.”

That is the fallacy. In a fair roulette wheel the odds of being black or red never change no matter how many times you get one result, any combination of outcomes has the same odds as any other. In fact it is far more likely that the wheel has a physical bias towards Black than the odds righting themselves, probability has no memory.

Applying the Method to the Fallacy

Amusing as it is the Monte Carlo Fallacy is the act of using observation to misinterpret probabalistic based statistics assuming the probabilities have memory, and the Monte Carlo Method is using observed events to create probabalistic forecasts by assuming (often dependent) events have independence.

Just as a point of interest. If you used the Monte Carlo Method for gambling on ‘fair’ Roulette Wheels you would likely have no difference to any other ‘system’ – probability cannot be influenced, it would simply be using a ‘method’ to compound your Fallacy.

However, in theory the method could be used to identify faulty Roulette Wheels or other unusual variations from probabilistic results (e.g. to spot cheating), or to predict gamblers’ behaviour.

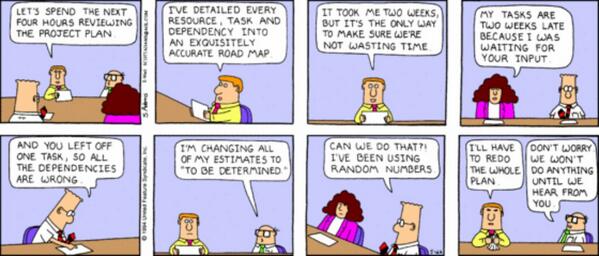

Applying precision to inaccurate data is dysfunctional behavior. It is using the fog of precision to create an illusion of accuracy.

The Fallacy of the Monte Carlo Method

As you have probably surmised I have some grave concerns about using the Monte Carlo Method for forecasting software delivery. In my opinion the Monte Carlo Method applies a huge degree of precision to an inaccurate forecast. And applying precision to inaccuracy is dysfunctional behavior. It feels to me that we are using the fog of precision to create an illusion of accuracy.

The weaker the data available upon which to base one’s conclusion, the greater the precision which should be quoted in order to give the data authenticity.

Norman Ralph Augustine

Applying to Software Development

In software projects we tend to apply the reverse of the Monte Carlo Fallacy, we make the assumption that the past accurately predicts the future, so accurately we can give a percentage confidence level. By doing so we are making certain assumptions.

The sample data MUST be reflective of the forecast situation.

- Future stories are similar in size, scope, complexity, and effort to the sample data. E.g. early stories are similar to later stories.

- Future stories are impacted similarly by external events (we don’t learn or fix problems)

- Our ability to do the work is constant

- The team does not change, either in size or skill set

- The team’s ability to work together does not improve or degrade

The sampledata must be also be statistically independent (results of one event does not impact another).

- The future work is not made easier by learning from previous work

- The future work is not made harder by adding to a growing code base

- We are not creating more rework later than we did at the beginning

- Testing, feedback or support do not change as product grows or ages.

- We do not improve our skills, or knowledge of the domain.

- We do not mitigate problems to prevent recurrence.

- We do not learn.

Would you feel comfortable giving that list of caveats along with a Monte Carlo forecast?

Where does Monte Carlo Work?

Monte Carlo Method and the simulations have many useful applications, googling brings up a whole bunch of options, but it works best where observations of sample data can be used to predict behavior. As an example: parcel delivery time, whilst it may change over time it will likely be static enough to predict times based on certain volumes. Or to set reasonable SLAs for an IT help desk where the work is likely consistent in variation.

Where does Monte Carlo NOT Work?

Where it does not work very well is in situations where the sample is small or is not likely reflective of the event being forecasted. Or where past events influence future events, if you learn, or improve or grow, or become over loaded or congested

Monte Carlo magnifies the significance of the sample data. Say you took a sample of 10 items and a 1 in a 100 event occurred Monte Carlo would apply a 1 in 10 significance to that event despite it being 1 in a 100. In software terms an unusually large story or small story or abnormal blocking event can throw off the results by magnifying the significance.

Quick example,

I ask 100 people to solve a puzzle and measure the time each takes.

If I use Monte Carlo forecasting based on the results, it will likely give me a pretty good projection of how the next 100 people will take to complete the puzzle.

If I apply Monte Carlo to forecast how long it will take those same 100 people to complete the same puzzle again it will likely get it completely wrong as some of them will likely get quicker learning from the first attempt.

Forecasting is Hard

The problem is that in most cases Software delivery is uncertain, most software products are complex and the complexity varies from story to story. Work varies, early stories differ in composition, size and scope from later stories, we don’t order work in a manner that balances effort or delivery time, we order based on maximising value. Work evolves, we respond to feedback and we update. We learn, the first time we see a particular problem we may struggle but the next time it is a breeze. As a consequence forecasting is very very hard to do.

NoEstimates helped a lot, we discovered that upfront estimating stories only gave us a marginal gain in accuracy for an upfront cost, and that by simply counting stories and measuring throughput we can get an adequate level of predictability, if we accept and understand the limitations.

Whenever I see a forecast written out to two decimal places, I cannot help but wonder if there is a misunderstanding of the limitations of the data, and an illusion of precision.

Barry Ritholtz

Accurate Forecasting of Software Products is Snake Oil

I am being a touch cynical but it is my experience that in most cases of people using Monte Carlo Simulations for software delivery forecasting it is being used by people that do not fully understand the tool, and the results are being presented to people that do not understand the limitations.

- I see people repeatedly tweak the settings until they get the answer they want,

- others excluding data they don’t like

- others making forecasts based on sample sizes of 6 stories

- Many more based on backlogs or stories that include work that will be broken down at the point the work starts

- or on backlogs excluding the possibility of new work being added (customer requests or bugs)

And those receiving the predictions believe that when the results say that there is a 90% confidence of hitting a particular date that they are completely unaware and uninformed of the assumptions behind that 90% figure or how it is calculated and there will be a great deal of expectation management needed to fix it later.

K.I.S.S.

I much prefer a simple moving average based on story count, nearly anyone can understand the numbers and the expectations and assertions of precision are absent so the expectation of accuracy is reduced. Good healthy conversation is invited about what might impact the product and it is very easy to see what could be done if the resulting forecast is beyond expectations.

Other than for a desire to dazzle someone with smoke and mirrors or to create false confidence, I struggle to see many situations where Monte Carlo adds any value over and above that which can be achieved with simple calculations. For me there is far more value in everyone understanding the calculation and limitations.

Final word

In the right context and for the right audience the Monte Carlo Method and the simulations are hugely valuable and can be used to great effect. But ensure you understand how and when to use them.

A forecast is only useful if it is data that can be used to make an informed decision to take action.

If you do not fully understand how Monte Carlo Simulations work (including the assumptions and limitations), OR if those you are presenting to, do not fully understand how Monte Carlo Simulations work or it’s limitations then be wary that you are not simply baffling your audience with graphs rather than presenting them with valuable information they can act on. They could be making ill-informed decisions.